Why is flows per second a flawed way to measure a netflow collector’s capability?

Flows-per-second is often treated as the primary yardstick for a NetFlow analyzer’s flow capture (aka collection) capability.

This seems simple on its face. The more flows-per-second that a flow collector can consume, the more visibility it provides, right? Well, yes and no.

The Basics

NetFlow was originally conceived to give network professionals the data they need to make sense of the traffic on their network without resorting to expensive per-segment packet sniffing tools.

A flow record contains the basic information about a transfer of data through a router, switch, firewall, packet tap or other network gateway. A typical flow record will contain at minimum: Source IP, Destination IP, Source Port, Destination Port, Protocol, ToS, Ingress Interface and Egress Interface. Flow records are exported to a flow collector, where they are ingested and the information relevant to the engineer’s purposes is displayed.
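As a rough illustration, the minimum field set listed above could be modelled as a small record type. This is a sketch only: the class name and field names are hypothetical and are not taken from any NetFlow specification or product, and real exports usually carry further fields such as byte and packet counters.

```python
from dataclasses import dataclass
from ipaddress import IPv4Address, IPv6Address, ip_address
from typing import Union

IPAddress = Union[IPv4Address, IPv6Address]


@dataclass(frozen=True)
class FlowRecord:
    """Hypothetical minimal flow record with the eight fields named in the text."""
    src_ip: IPAddress
    dst_ip: IPAddress
    src_port: int
    dst_port: int
    protocol: int    # IP protocol number, e.g. 6 = TCP, 17 = UDP
    tos: int         # Type of Service byte
    ingress_if: int  # index of the interface the traffic arrived on
    egress_if: int   # index of the interface the traffic left on


# Example record using documentation-only addresses (RFC 5737 ranges).
record = FlowRecord(
    src_ip=ip_address("192.0.2.10"),
    dst_ip=ip_address("198.51.100.7"),
    src_port=51514,
    dst_port=443,
    protocol=6,
    tos=0,
    ingress_if=1,
    egress_if=2,
)
```

Making the record immutable (`frozen=True`) means it can be used as a dictionary key or set member, which is convenient when counting distinct conversations.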

Measurement

Measurement has always been how the IT industry expresses power and competency. However, a formula used to reflect power and ability changes when a technology design undergoes a paradigm shift.

For example, we used to express how fast a computer is by its CPU clock speed. We believed that the higher the clock speed, the more powerful the computer. However, when multi-core chips were introduced, clock speeds dropped but the CPU in fact became more powerful. Clock speed, once the primary indicator, became secondary to the ability to multi-thread.

The flows-per-second yardstick is misleading as it incorrectly reflects the actual power and capability of a flow collector to capture and process flow data and it has become prone to marketing exaggeration.

Flow Capture Rate

Flow capture rate is difficult to measure, and on its own it is a poor way to quantify a product’s scalability. Various factors can dramatically affect the ability to collect flows and to retain enough of them to perform higher-end diagnostics.

It’s important to look beyond flows-per-second to the granularity retained per minute (the flow retention rate), the speed and flexibility of alerting and reporting, and the forensic depth and diagnostics available. Equally important is how the product scales under high flow variance, sudden bursts and a growing number of devices and interfaces, how reporting speed holds up over time, and whether it can retain both short-term and historical collections. It is the confluence of these factors that determines the scalability of the software as a whole.

Scalability and flow retention rates are particularly critical to establish, because appropriate granularity is needed to deliver the visibility required for Anomaly Detection, Network Forensics, Root Cause Analysis, Billing substantiation, Peering Analysis and Data retention compliance.

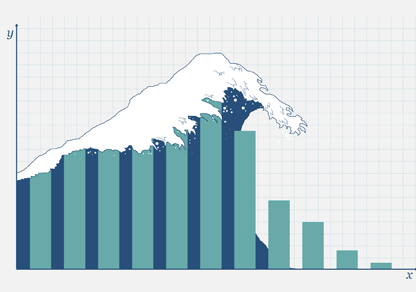

The higher the flows-per-second and the flow variance, the more challenging it becomes to achieve a high flow retention rate when archiving flow records in a data warehouse.

A vendor’s capability statement might reflect a high flows-per-second consumption ability but many flow software tools have retention rate limitations by design.

This can mean that, however high the flow collection rate, the NetFlow analyzer might only be capable of physically archiving 500 flows per minute. Furthermore, these flows are usually the result of sorting the flow data by top bytes to identify “Top 10” bandwidth abusers. NetFlow products of this kind are easy to identify because their benefits tend to be oriented primarily towards identifying bandwidth abuse or monitoring network performance.
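The effect of retaining only the top flows by bytes can be sketched in a few lines. The example below is an assumption-laden illustration, not any product's actual retention logic: the `Flow` tuple, the `retain_top_n_by_bytes` helper and the traffic mix are all hypothetical.

```python
import heapq
from collections import namedtuple

# Hypothetical, simplified flow summary for one collection interval.
Flow = namedtuple("Flow", ["src_ip", "dst_ip", "dst_port", "byte_count"])


def retain_top_n_by_bytes(flows, n):
    """Keep only the n largest flows by byte count; all others are discarded."""
    return heapq.nlargest(n, flows, key=lambda f: f.byte_count)


# Ten bulk transfers of 1 MB to 10 MB each.
bulk = [
    Flow("10.0.0.1", f"10.0.1.{i + 1}", 443, (i + 1) * 1_000_000)
    for i in range(10)
]

# One thousand tiny 60-byte flows from a single source, e.g. a port scan.
scan = [
    Flow("203.0.113.9", f"10.0.2.{i % 250 + 1}", 22, 60)
    for i in range(1000)
]

minute_of_traffic = bulk + scan
retained = retain_top_n_by_bytes(minute_of_traffic, 10)

# Count how many of the small flows survived the top-bytes filter.
small_flows_retained = sum(1 for f in retained if f.byte_count == 60)
flows_discarded = len(minute_of_traffic) - len(retained)
```

In this made-up interval the ten retained records are all bulk transfers, so every one of the 1,000 small flows is discarded and the scan leaves no trace in the archive.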

Identifying bandwidth abusers is of course a very important benefit of a NetFlow analyzer. However, its benefit is marginal today, when a large share of abuse and risk is caused by many small flows.

These small flows usually fall beneath the radar of many NetFlow analysis products. Many abuses like DDoS, p2p, botnets and hacker or insider data exfiltration continue to occur and can at minimum impact the networking equipment and user experience. Being unable to quantify and understand small flows creates great risk and leaves organizations exposed.

Scalability

This inability to scale in short-term or historical analysis severely impacts a flow monitoring product’s ability to collect and retain critical information required in today’s world where copious data has created severe network blind spots.

To determine whether a tool is really fit for purpose, you need to know more about the flows-per-second collection formula the vendor is quoting, and you should investigate further to verify the claims.

With this in mind here are 3 key questions to ask your NetFlow vendor to understand what their collection scalability claims really mean:

- How many flows can be collected per second?

  - Establish whether the flows-per-second rate provided is a burst rate or a sustained rate.

  - Ask how the collection and retention rates might be affected if the flows have high flow variance (e.g. a DDoS attack).

  - Ask how collection, archiving and reporting are impacted when flow variance is increased by adding many devices, interfaces and distinct IPv4/IPv6 conversations, and test what degradation in speed you can expect after the tool has been recording for some time.

  - Ask how the collection and retention rates might change when additional fields or measurements are added to the flow template (e.g. MPLS, MAC Address, URL, Latency).

- How many flow records can be retained per minute?

  - Ask how the actual number of records inserted into the data warehouse per minute can be verified for short-term and historical collection.

  - Ask what happens to the flows that were not retained.

  - Ask what the flow retention logic is (e.g. Top Bytes, First N).

- What information granularity is retained, both short-term and historically?

  - Does the data’s time granularity degrade as the data ages (e.g. 1 day of data retained per minute, 2 days retained per hour, 5 days retained per quarter)?

  - Can you control the granularity and, if so, for how long?
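The collection-rate and retention-rate questions above come down to simple arithmetic once you have the vendor's numbers. The sketch below is illustrative only: the function names and the sample traffic are hypothetical, the 10,000 flows-per-second figure is an assumed vendor claim, and the 500 flows-per-minute figure is the archiving limit mentioned earlier.

```python
def sustained_and_burst_rate(per_second_counts):
    """Return (sustained, burst): the mean and the peak flows per second."""
    if not per_second_counts:
        raise ValueError("need at least one per-second sample")
    sustained = sum(per_second_counts) / len(per_second_counts)
    burst = max(per_second_counts)
    return sustained, burst


def retention_ratio(collected_per_second, retained_per_minute):
    """Fraction of collected flows that are actually written to storage."""
    collected_per_minute = collected_per_second * 60
    return retained_per_minute / collected_per_minute


# Hypothetical minute: 55 quiet seconds followed by a 5-second burst.
samples = [2_000] * 55 + [50_000] * 5
sustained, burst = sustained_and_burst_rate(samples)

# Assumed claim of 10,000 flows/sec collected, with 500 flows/min archived.
ratio = retention_ratio(collected_per_second=10_000, retained_per_minute=500)

print(f"sustained: {sustained:.0f} flows/sec, burst: {burst} flows/sec")
print(f"retained: {ratio:.4%} of collected flows")
```

With these made-up numbers the burst rate is more than eight times the sustained rate, and fewer than one in a thousand collected flows reaches the archive, which is why both figures are worth asking for separately.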

Remember – Rate of collection does not translate to information retention.

Do you know what’s really stored in the software’s database? After all, you can only analyze what has been retained (either in memory or on disk), and it is the granularity of that retained information that delivers a flow product’s benefits.