US: December 13, 2020 was an eye-opener worldwide: SolarWinds’ Orion software was revealed to have been hacked through a trojanized update carrying the SUNBURST backdoor. The damage reached thousands of customers, many of them leaders in their markets, such as Intel, Microsoft, Lockheed, and Visa, along with several U.S. government agencies. The full extent of the damage has not yet been quantified, as more is still being learned; nevertheless, the fallout includes real-world harm.

The recent news of the SolarWinds Orion hack is very unfortunate. The hack has left governments and customers who used the SolarWinds Orion tools especially vulnerable, and it will take many months for the full fallout to be recognized. This is a prime example of what happens when a flow metadata tool cannot retain sufficient records: the resulting intelligence is ineffective, and the inability to reveal hidden issues and threats is now clearly impacting the networks and connected assets of organizations and governments.

Given what we already know and that more is still being learned, it makes good sense to investigate an alternative solution.

What Is the SUNBURST Trojan Attack?

SUNBURST, as named by FireEye, is malware that acts as a trojan horse, designed to look like a safe and trustworthy update for SolarWinds customers. To infiltrate seemingly well-protected organizations, the hackers first had to infiltrate the SolarWinds infrastructure. Once SolarWinds was compromised, the bad actors could rely on the trust between SolarWinds and the targeted organizations to carry out the attack. The malware, which looked like a routine update, in fact created a back door, compromising the SolarWinds Orion software and any customer who installed the update.

How was SUNBURST detected?

Initially, the SUNBURST malware went completely undetected. The attackers began inserting remote access malware into SolarWinds Orion software updates as far back as March 2020, essentially trojanizing them. On December 8, 2020, FireEye discovered that its own red team tools had been stolen and started to investigate while reporting the event to the NSA. The NSA, which is responsible for U.S. cybersecurity defense and is also a SolarWinds software user, was unaware of the hack at the time. A few days later, as the information became public, cybersecurity firms began reverse engineering and analyzing the hack.

IT’S WHAT WE DON’T SEE THAT POSES THE BIGGEST THREATS AND INVISIBLE DANGERS!

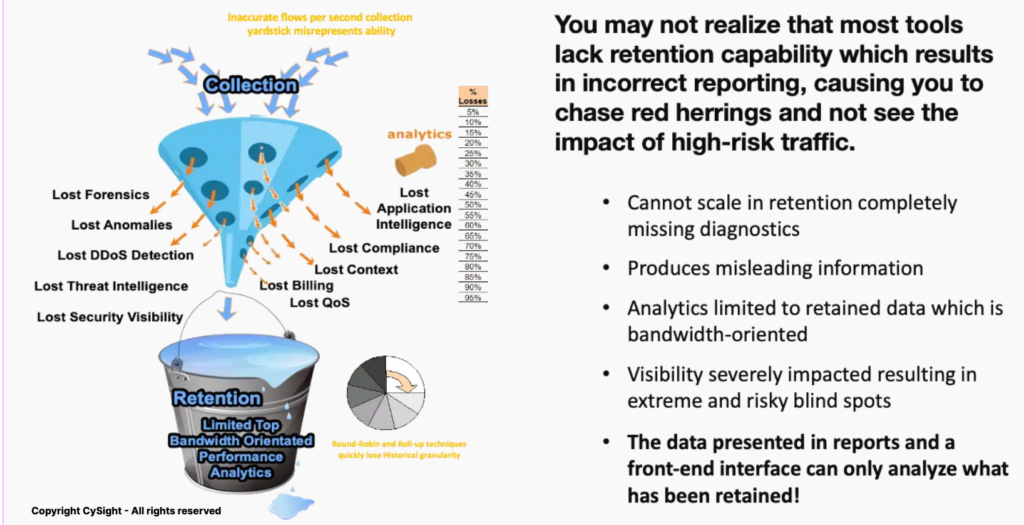

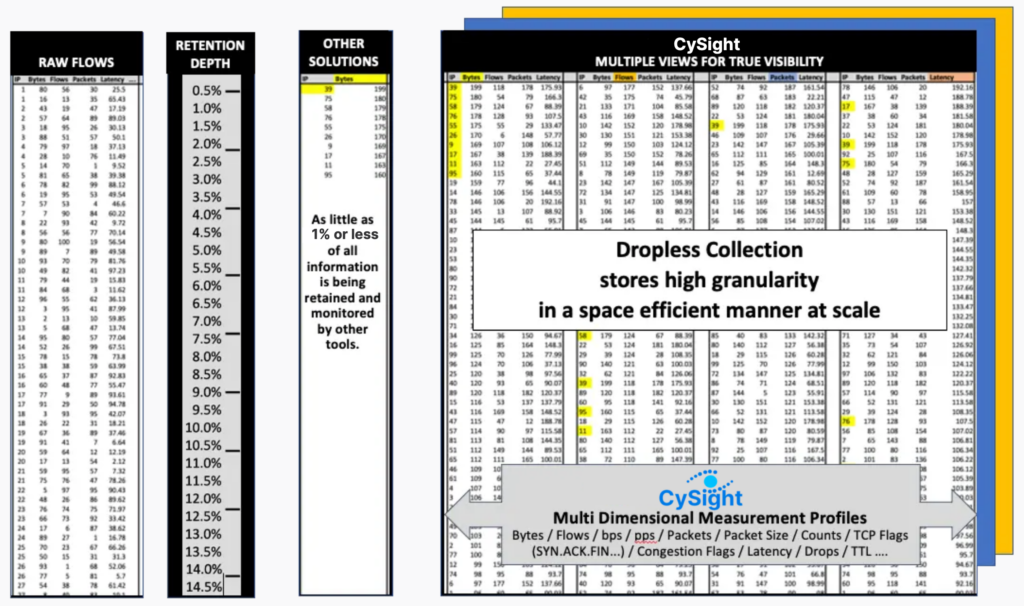

You may be surprised to learn that most well-known tools lack the REAL Visibility that could have prevented attacks on a network and its local and cloud-connected assets. Serious shortcomings in the base designs of other flow solutions leave them unable to scale in retention. This is why smart analysts are realizing that Threat Intelligence and Flow Analytics today are all about having access to long-term granular intelligence.

From a forensics perspective, you can only analyze the data you retain. With large and growing network and cloud data flows, most tools (regardless of their marketing claims) cannot scale in retention, so they drop records and keep only what they believe is salient data.

A simple way to think about this is to imagine trying to collect water from a blasting fire hose in a drinking cup. You simply cannot collect very much!

Many engineers build scripts to try to attain the missing visibility and do a lot of heavy lifting, only to realize that no matter how much lifting you do, if the data isn’t there you can’t analyze it.

How does CySight hunt SUNBURST and other Malware?

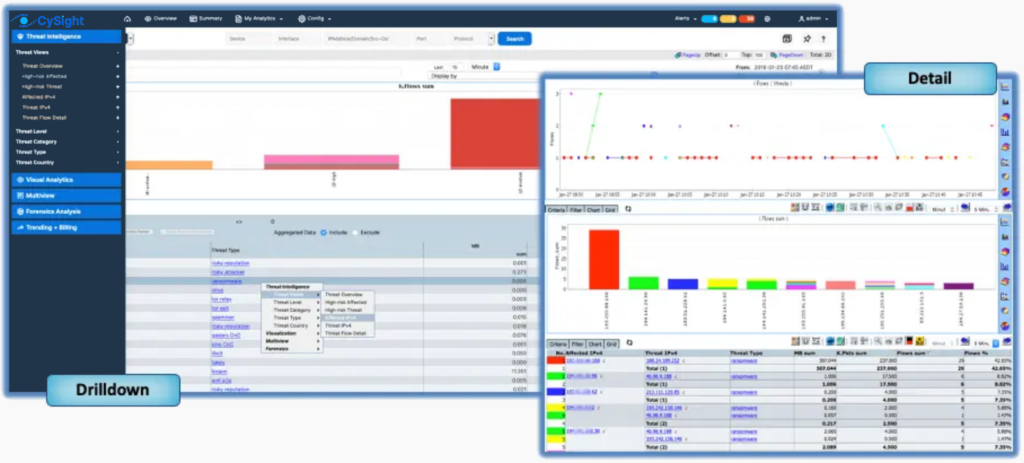

It’s often necessary to look back and re-analyze past traffic in light of new knowledge.

For a recently discovered Ransomware or Trojan, such as SUNBURST, it is helpful to see if it’s been active in the past and when it started. Another example is being able to analyze all the related traffic and qualify how long a specific user or process has been exfiltrating an organization’s Intellectual Property and quantify the risk.
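A look-back of this kind can be sketched in a few lines, assuming the historical flow records are still available. This is a generic illustration, not CySight's implementation: the `FlowRecord` schema, the `retro_hunt` helper, and all addresses (taken from documentation-only ranges) are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class FlowRecord:
    """One retained flow record (hypothetical schema for illustration)."""
    ts: datetime
    src: str
    dst: str
    bytes_out: int


def retro_hunt(flows, indicators):
    """Summarize historical contact with newly published indicators.

    Returns {(src, dst): {"first_seen", "last_seen", "flows", "bytes_out"}}.
    """
    hits = {}
    for f in flows:
        if f.dst not in indicators:
            continue
        h = hits.setdefault(
            (f.src, f.dst),
            {"first_seen": f.ts, "last_seen": f.ts, "flows": 0, "bytes_out": 0},
        )
        h["first_seen"] = min(h["first_seen"], f.ts)
        h["last_seen"] = max(h["last_seen"], f.ts)
        h["flows"] += 1
        h["bytes_out"] += f.bytes_out
    return hits


# Made-up history using documentation-only address ranges.
history = [
    FlowRecord(datetime(2020, 4, 2, 3, 15), "10.0.0.5", "203.0.113.77", 1_200),
    FlowRecord(datetime(2020, 6, 9, 1, 40), "10.0.0.8", "198.51.100.20", 900),
    FlowRecord(datetime(2020, 9, 21, 2, 5), "10.0.0.5", "203.0.113.77", 48_000),
    FlowRecord(datetime(2020, 12, 1, 4, 30), "10.0.0.5", "203.0.113.77", 350_000),
]
new_indicators = {"203.0.113.77"}  # hypothetical indicator learned in December

report = retro_hunt(history, new_indicators)
for (src, dst), h in report.items():
    days = (h["last_seen"] - h["first_seen"]).days
    print(f"{src} -> {dst}: {h['flows']} flows over {days} days, "
          f"{h['bytes_out']} bytes out")
```

The answer is only as good as the retained history: if the April record had been dropped, the apparent start of the activity would move to September.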

SUNBURST enabled the criminals to install a Remote Access Trojan (RAT). RATs, like most Malware, are introduced as part of legitimate-looking files. Once enabled, they allow the hacker to view a screen or a terminal session and look for sensitive data such as customers’ credit card details, intellectual property, or sensitive company or government secrets.

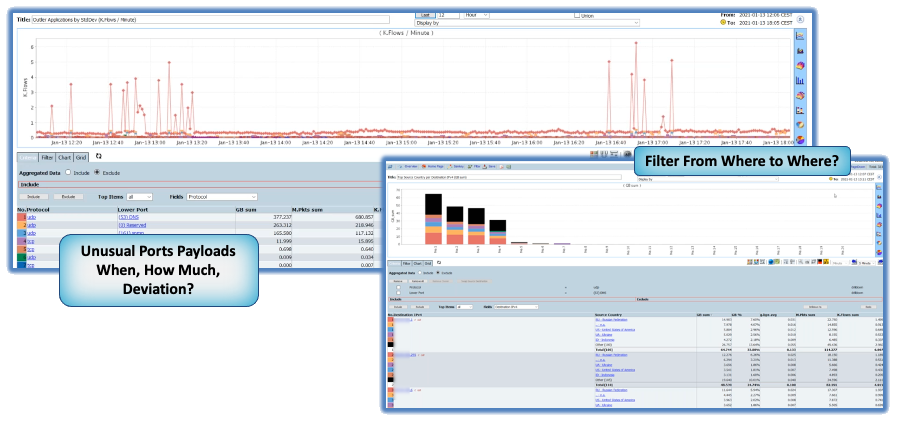

Even though many antivirus products can identify many RAT signatures, the software and protocols used to view remotely and to exfiltrate files continue to evade many malware detection systems. We must therefore turn to traffic analytics and machine learning to identify traffic behaviors and data movements that are out of the ordinary.
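As a rough illustration of what "out of the ordinary" can mean, the sketch below flags outliers in a host's daily outbound volume using the modified z-score (median and median absolute deviation). It is a simple statistical stand-in rather than the machine learning the text refers to, and the function name, threshold, and sample numbers are assumptions made for the example.

```python
from statistics import median


def mad_outliers(values, threshold=3.5):
    """Return indexes whose modified z-score exceeds the threshold."""
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        return []  # no spread in the baseline, so this score is undefined
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - med) / mad > threshold
    ]


# Made-up daily outbound megabytes for one host.
daily_outbound_mb = [120, 130, 125, 118, 122, 127, 950, 121]
print(mad_outliers(daily_outbound_mb))
```

The median-based score is used here because a single large spike barely moves the baseline, whereas a mean and standard deviation would be pulled toward the very value being tested.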

Anonymity by Obscurity

In order to evade detection, hackers try to hide in plain sight, exfiltrating your data over protocols and ports that are not usually blocked, such as DNS, HTTP, and port 443 (HTTPS).

Many methods are used to exfiltrate your data. An often-used method is to use p2p technologies to break files into small pieces and slowly send the data, unnoticed by other monitoring systems. Thanks to CySight’s small-footprint Dropless Collection, you can easily identify sharding, and our anomaly detection will identify the outlier traffic and quickly bring it to your attention. When used in conjunction with a packet broker partner such as Keysight, Gigamon, nProbe, or another supported packet metadata exporter, CySight provides the extreme application intelligence and complete visibility you need to control the breach.
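To see why sharding is only visible in aggregate, consider the minimal sketch below. It assumes flows are available as `(src, dst, bytes)` tuples and flags sources that send many small flows adding up to a large total. The function name and all thresholds are hypothetical choices for illustration, not CySight's detection logic.

```python
from collections import defaultdict


def find_sharded_senders(flows, max_flow_bytes=50_000, min_flows=100,
                         min_total_bytes=10_000_000):
    """Flag sources whose many small flows add up to a large transfer."""
    count = defaultdict(int)
    total = defaultdict(int)
    peers = defaultdict(set)
    for src, dst, nbytes in flows:
        if nbytes > max_flow_bytes:
            continue  # large single transfers are a different detection problem
        count[src] += 1
        total[src] += nbytes
        peers[src].add(dst)
    return {
        src: {"flows": count[src], "bytes": total[src], "peers": len(peers[src])}
        for src in count
        if count[src] >= min_flows and total[src] >= min_total_bytes
    }


# Made-up traffic: one host trickles 40 KB pieces to five peers.
flows = [("10.0.0.5", f"203.0.113.{i % 5 + 1}", 40_000) for i in range(500)]
flows += [("10.0.0.7", "198.51.100.20", 2_000)] * 10    # light, normal use
flows += [("10.0.0.9", "198.51.100.30", 30_000_000)]    # one big download

suspects = find_sharded_senders(flows)
print(suspects)
```

No individual 40 KB flow looks remarkable; the pattern appears only when every small record is kept and summed, which is why dropped records hide this kind of transfer.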

Identifying exposure

In today’s connected world, every incident has a communications component.

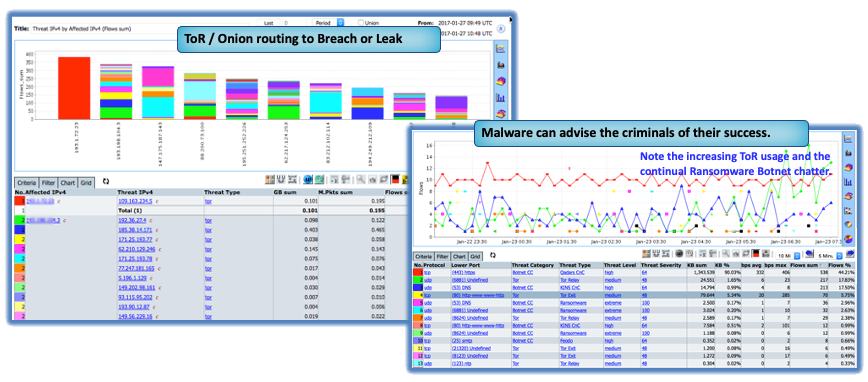

Keep in mind that all Malware needs to “call home”. Today this is done through onion-routed connections, encrypted VPNs, or zombies that have been seeded as botnets, making it difficult, if not impossible, to identify the hacking teams involved, who may be personally, commercially, or politically motivated bad actors.

Multi-focal threat hunting

Threat hunting for SUNBURST or other Malware requires multi-focal analysis at a granular level that simply cannot be attained by sampling methods. It does little good to be alerted to a possible threat without having the detail to understand context and impact. A hacker who has control of your system will likely install multiple back doors on various interrelated systems so they can return when you are off guard.
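The limitation of sampling can be made concrete with a little arithmetic. Assuming independent random 1-in-N packet sampling (a simplification, since real exporters may sample deterministically or per flow), the chance that a flow of k packets leaves no record at all is (1 - 1/N)^k. The helper name below is hypothetical.

```python
def miss_probability(packets, one_in_n):
    """Chance that none of a flow's packets is picked by 1-in-N sampling."""
    if packets < 0 or one_in_n < 1:
        raise ValueError("packets must be >= 0 and one_in_n must be >= 1")
    return (1 - 1 / one_in_n) ** packets


for packets in (5, 50, 500):
    for one_in_n in (100, 1000):
        p = miss_probability(packets, one_in_n)
        print(f"{packets:>4} packets, 1-in-{one_in_n}: "
              f"{p:.1%} chance of leaving no record")
```

Under these assumptions, a short command-and-control check-in of a handful of packets is far more likely to be missed than seen, which is the kind of low-volume traffic threat hunting depends on.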

CySight Turbocharges Flow and Cloud analytics for SecOps and NetOps

As with all CySight analytics and detection, you don’t have to do any heavy lifting. We do it all for you!

There is no need to create or maintain special groups with SUNBURST or other Malware IP addresses or domains. Every CySight instance is built to keep itself aware of new threats, which are automatically downloaded over a secure pipe from our Threat Intelligence qualification engine. The engine collects, collates, and categorizes threats from around the globe or from partner threat feeds.

CySight identifies your systems conversing with Bad Actors and allows you to backtrack through historical data to see how long it’s been going on.

Using Big Data threat feeds collated from multiple sources, thousands of IPs of bad reputation are correlated in real time with your traffic. The threat data is freshly derived from many enterprises and sources to provide effective visibility of threats and attackers. Sources include:

Cyber feedback

Global honeypots

Threat feeds

Crowd sources

Active crawlers

External 3rd Party
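The general idea of collating several feeds and correlating them with traffic can be sketched with Python's standard `ipaddress` module. This is a minimal illustration, not CySight's engine: the feed names, entries, and helper functions are hypothetical, and a production system would use an indexed structure such as a prefix trie instead of a linear scan.

```python
import ipaddress


def build_blocklist(feeds):
    """Merge feeds of IP or CIDR strings into {network: {feed names}}."""
    merged = {}
    for name, entries in feeds.items():
        for entry in entries:
            net = ipaddress.ip_network(entry, strict=False)
            merged.setdefault(net, set()).add(name)
    return merged


def match(ip, blocklist):
    """Return the sorted names of every feed that lists this address."""
    addr = ipaddress.ip_address(ip)
    return sorted(
        {name for net, names in blocklist.items() if addr in net for name in names}
    )


# Made-up feeds using documentation-only address ranges.
feeds = {
    "honeypot": ["203.0.113.0/24"],
    "partner_feed": ["203.0.113.77", "198.51.100.9"],
}
blocklist = build_blocklist(feeds)

for dst in ("203.0.113.77", "198.51.100.9", "192.0.2.1"):
    print(dst, match(dst, blocklist))
```

Keeping the feed names alongside each match preserves context: an address reported by several independent sources can be prioritized over one that appears in a single list.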

So how exactly do you go about turbocharging your Flow and Cloud metadata?

CySight software is capable of the highest level of granularity, scalability, and flexibility available in the network and cloud flow metadata market. Lack of granular visibility is one of the main flaws, if not the main flaw, in such products today: due to inefficient design, they retain as little as 2% to 5% of all information collected. The resulting missing and misleading analytics severely impact visibility and risk, costing organizations greatly.

CySight’s Intelligent Visibility, Dropless Collection, automation, and machine intelligence reduce the heavy lifting in alerting, auditing, and discovering your network, making performance analytics, anomaly detection, threat intelligence, forensics, compliance, zero trust, and IP accounting and mitigation a breeze!