The increasing density, complexity and expanse of modern networking environments have fueled an ongoing debate over which network analysis and monitoring tools best serve the modern engineer, placing Packet Capture and NetFlow analysis, as the basis for Network Detection and Response (NDR), at center stage of the conversation.

Granted, when analyzing unencrypted traffic, both can be extremely valuable tools in ongoing efforts to maintain and optimize complex environments. As an engineer, though, I tend to focus on solutions that give me the insights I need without costing too many resources, while complementing my team’s ability to maintain and optimize the environments we support.

So with this in mind, let’s take a look at how NetFlow, in the context of the highly dense networks we find today, delivers three key requirements that network teams rely on for reliable end-to-end performance monitoring of their environments.

A NetFlow deployment won’t drain your resources

Packet Capture, also known as Deep Packet Inspection (DPI), was once rich in network metrics but has finally failed in the face of encryption, and its segment-based approach makes it expensive to deploy and maintain. It requires sniffing devices and agents throughout the network, which invariably demand a huge amount of maintenance during their lifespan.

In addition, the amount of space required to store and analyze packet data makes it an inefficient and inelegant method of monitoring or forensic analysis. Combine this with the levels of complexity networks can reach today, and the overall cost and maintenance associated with DPI can quickly become unfeasible.

In the case of NetFlow, its wide vendor support across virtually the entire networking landscape makes almost every switch, router or firewall, along with platforms such as VMware, VMware VeloCloud, Google Cloud (GCP), Azure and AWS, a NetFlow / IPFIX / sFlow / ixFlow “ready” device. This built-in readiness to capture and export data-rich metrics makes flow monitoring easy for engineers to deploy and use. Also, thanks to that popularity, CySight’s NetFlow analyzer provides a range of feature sets, enriched with vendor-specific flow fields, so that security operations center (SOC) and network operations center (NOC) teams can take full advantage of data-rich packet flows.
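To put the storage point above in perspective, here is a rough back-of-envelope sketch. Every figure in it (link speed, utilization, flow rate, record size) is an illustrative assumption rather than a measurement, so substitute numbers from your own environment.

```python
# Back-of-envelope storage comparison.
# All inputs are illustrative assumptions, not measurements.

SECONDS_PER_DAY = 24 * 60 * 60


def packet_capture_bytes_per_day(link_bits_per_sec, utilization):
    """Full packet capture stores roughly every byte that crosses the link."""
    return link_bits_per_sec / 8 * utilization * SECONDS_PER_DAY


def flow_export_bytes_per_day(flows_per_sec, bytes_per_record):
    """Flow export stores one compact summary record per flow."""
    return flows_per_sec * bytes_per_record * SECONDS_PER_DAY


# Assumed scenario: a 1 Gbit/s link at 30% average utilization,
# producing 2,000 flow records per second at 64 bytes per record.
pcap = packet_capture_bytes_per_day(link_bits_per_sec=1e9, utilization=0.30)
flows = flow_export_bytes_per_day(flows_per_sec=2_000, bytes_per_record=64)

print(f"packet capture: {pcap / 1e12:.2f} TB/day")
print(f"flow records:   {flows / 1e9:.2f} GB/day")
print(f"ratio:          {pcap / flows:.0f}x")
```

Under these assumed inputs the gap is a couple of orders of magnitude per monitored link, before retention periods and multiple capture points are taken into account.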

Striking the balance between detail and context

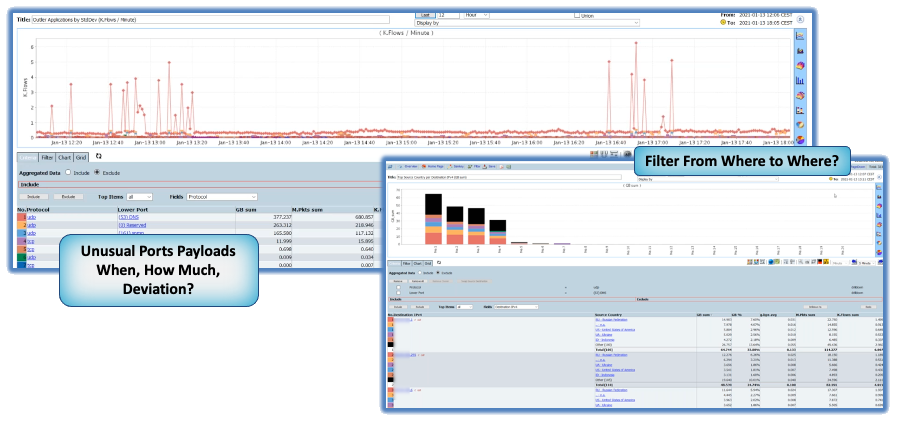

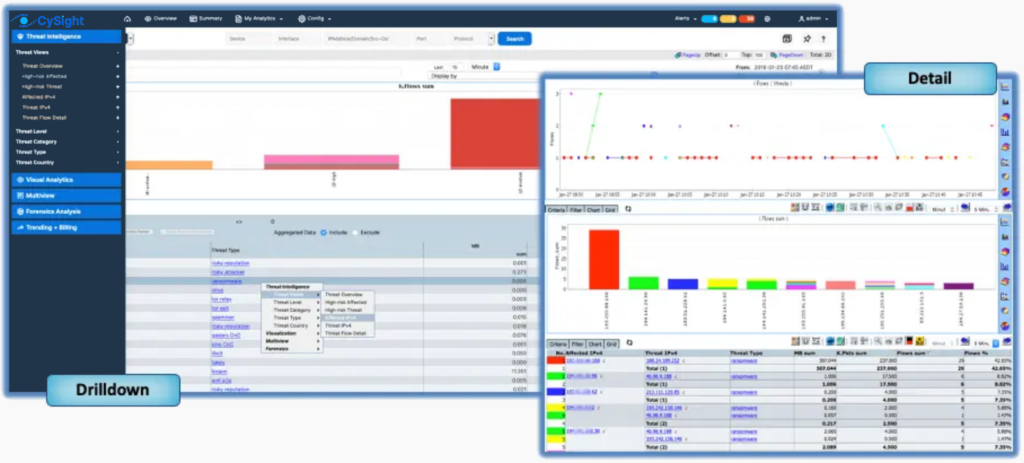

Considering how network-dependent and widespread applications have become in recent years, the ability of NetFlow, IPFIX, sFlow and ixFlow to provide WAN-wide metrics in near real-time makes them suitable troubleshooting companions for engineers. Add enriched context to this, and teams can qualify impact very completely, both from a standard traffic analysis perspective and through endpoint threat views, machine learning and AI diagnostics.

The latest flow methods extend this wealth of information. Because data is collected via a template-based scheme, flow export strikes a balance between detail and high-level insight without placing too much demand on networking hardware, which is something that can’t be said for Deep Packet Inspection. NetFlow’s constant evolution alongside the networking landscape is seeing it used as a complement to solutions such as Cisco’s NBAR. Packet broker vendors such as Keysight, Ixia, Gigamon, nProbe, NetQuest, Niagara Networks and CGS Tower Networks have also recognized that all they need to do to reveal details at the packet level is export flexible, enriched flow fields.
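The template-based scheme mentioned above can be sketched in a few lines. In NetFlow v9 and IPFIX, the exporter first announces a template listing which fields each record carries and how long each one is, and the collector then uses that template to decode the data records. The sketch below is deliberately simplified: it skips packet headers, flowsets and template IDs, and the template and sample record are hypothetical. The field type numbers are the ones defined for NetFlow v9 in RFC 3954.

```python
import socket
import struct

# Field type IDs as defined for NetFlow v9 (RFC 3954).
FIELD_NAMES = {
    1: "IN_BYTES",
    2: "IN_PKTS",
    4: "PROTOCOL",
    7: "L4_SRC_PORT",
    8: "IPV4_SRC_ADDR",
    11: "L4_DST_PORT",
    12: "IPV4_DST_ADDR",
}
IPV4_FIELDS = {8, 12}


def decode_record(template, payload):
    """Decode one data record using a template of (field_type, length) pairs."""
    record, offset = {}, 0
    for field_type, length in template:
        raw = payload[offset:offset + length]
        if len(raw) != length:
            raise ValueError("payload is shorter than the template describes")
        name = FIELD_NAMES.get(field_type, f"FIELD_{field_type}")
        if field_type in IPV4_FIELDS:
            record[name] = socket.inet_ntoa(raw)       # dotted-quad address
        else:
            record[name] = int.from_bytes(raw, "big")  # network byte order
        offset += length
    return record


# Hypothetical template an exporter might announce for IPv4 traffic.
template = [(8, 4), (12, 4), (7, 2), (11, 2), (4, 1), (1, 4), (2, 4)]

# Hypothetical data record laid out exactly as the template describes.
payload = (
    socket.inet_aton("10.0.0.5")
    + socket.inet_aton("192.0.2.10")
    + struct.pack("!HHBII", 51514, 443, 6, 48_213, 37)
)

print(decode_record(template, payload))
```

Because the collector learns the record layout from the template rather than from a fixed format, a vendor can add enriched fields simply by announcing a new template, with unknown field types still decoded generically.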

NetFlow places your environment in greater context

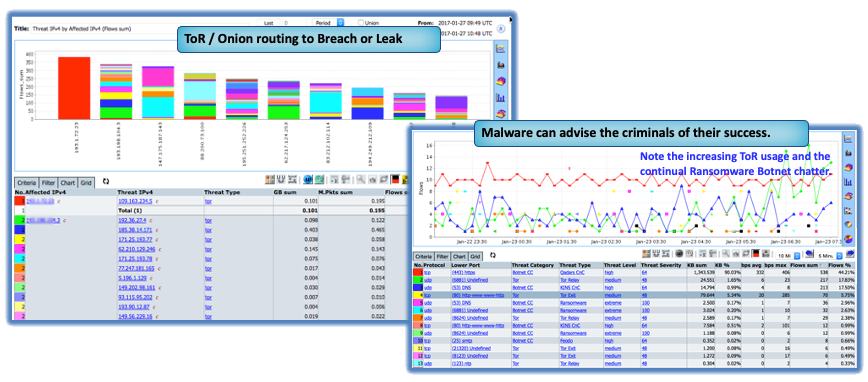

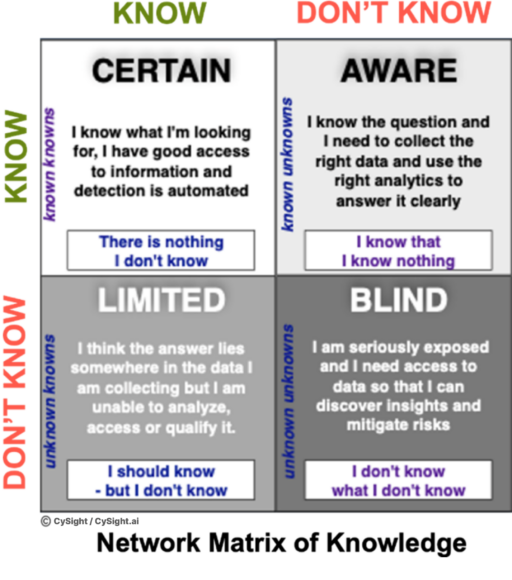

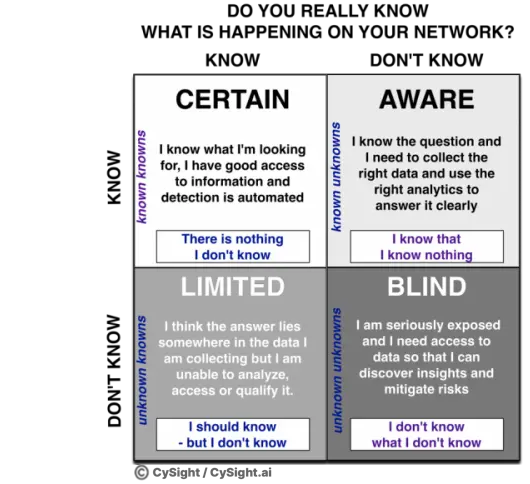

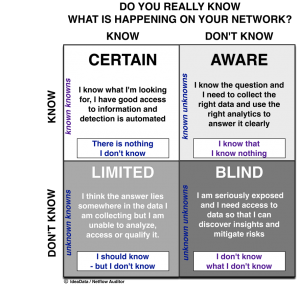

Context is a chief area where granular NetFlow beats out Packet Capture, since it allows engineers to quickly locate root causes relating to cyber security, threat hunting and performance by providing a more situational view of the environment: its data flows, bottleneck-prone segments, application behavior, device sessions and so on.

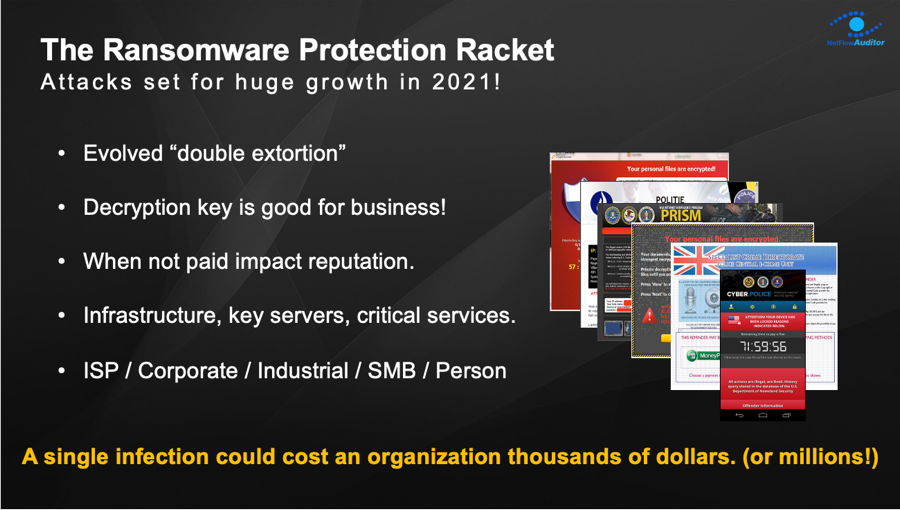

One could argue that Deep Packet Inspection (DPI) is able to provide much of this information too. But with networks today over 98% encrypted, even using certificates to decrypt traffic won’t give engineers the broader context around the information it presents. That hamstrings IT teams trying to detect anomalies that could be ascribed to any number of factors, such as cyber threats, untimely system-wide application or operating system updates, or a cross-link backup application pulling loads of data across the WAN during operational hours.
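As a minimal sketch of that last scenario, the snippet below flags conversations that move a lot of data during working hours using nothing but flow metadata, so it works whether or not the payload is encrypted. The flow records, the business-hours window and the 1 GB threshold are all hypothetical values chosen for illustration.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical, already-decoded flow records:
# (start time, source, destination, destination port, bytes)
flows = [
    (datetime(2024, 3, 4, 10, 5), "10.1.0.20", "10.9.0.5", 443, 4_000_000),
    (datetime(2024, 3, 4, 10, 7), "10.1.0.31", "10.9.0.5", 443, 6_500_000),
    (datetime(2024, 3, 4, 10, 9), "10.2.0.8", "10.9.0.77", 10000, 9_200_000_000),
    (datetime(2024, 3, 4, 23, 30), "10.2.0.8", "10.9.0.77", 10000, 8_700_000_000),
]

BUSINESS_HOURS = range(8, 18)     # assumption: 08:00 to 17:59 local time
THRESHOLD_BYTES = 1_000_000_000   # assumption: flag conversations above 1 GB


def heavy_business_hour_talkers(records):
    """Sum bytes per (src, dst, port) in business hours and keep the heavy ones."""
    totals = defaultdict(int)
    for start, src, dst, port, nbytes in records:
        if start.hour in BUSINESS_HOURS:
            totals[(src, dst, port)] += nbytes
    flagged = {key: total for key, total in totals.items() if total >= THRESHOLD_BYTES}
    return sorted(flagged.items(), key=lambda item: item[1], reverse=True)


for (src, dst, port), total in heavy_business_hour_talkers(flows):
    print(f"{src} -> {dst}:{port} moved {total / 1e9:.1f} GB during business hours")
```

In this sample data only the mid-morning transfer from 10.2.0.8 is flagged; the same host's late-night transfer falls outside the assumed window and is ignored.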

So does NetFlow make Deep Packet Inspection obsolete?

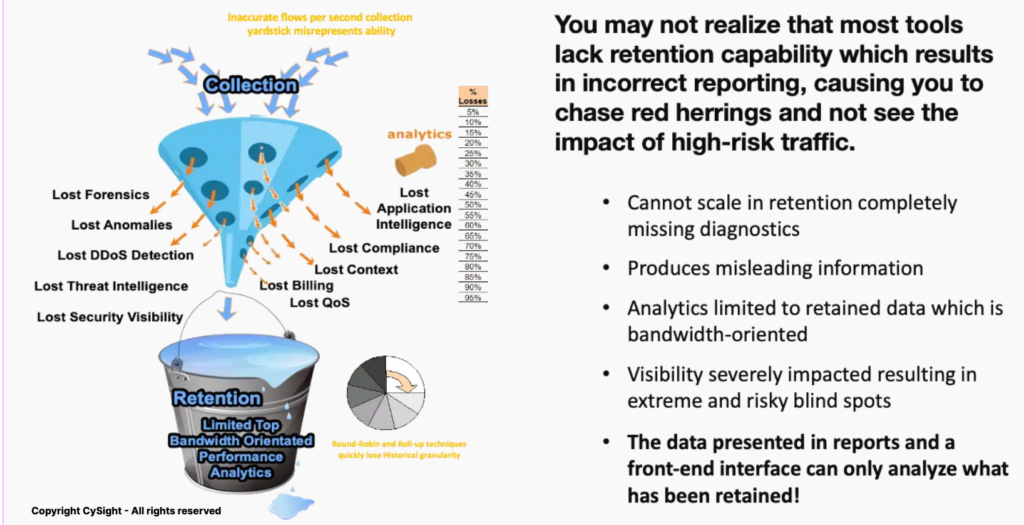

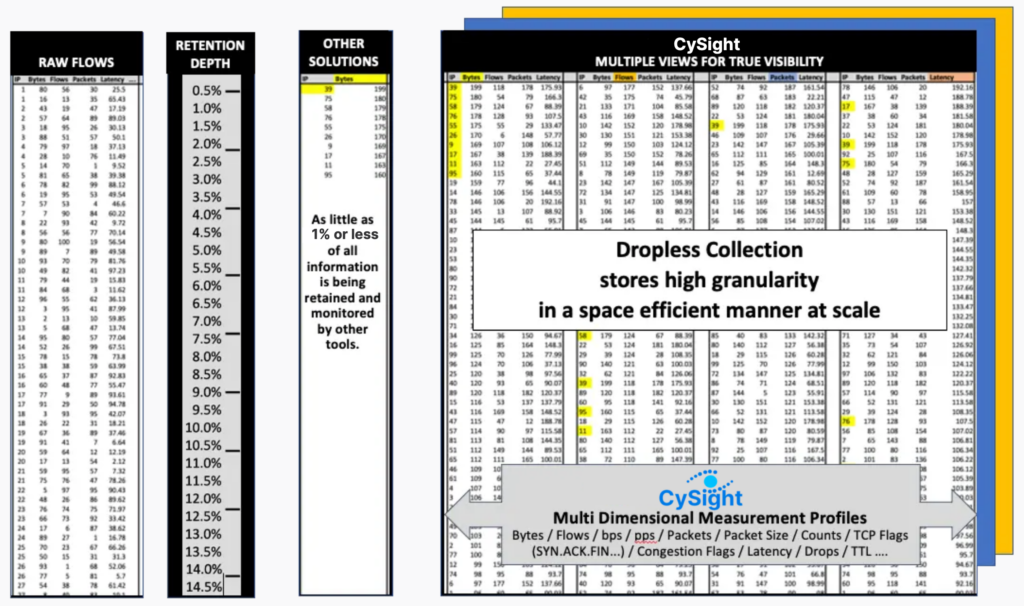

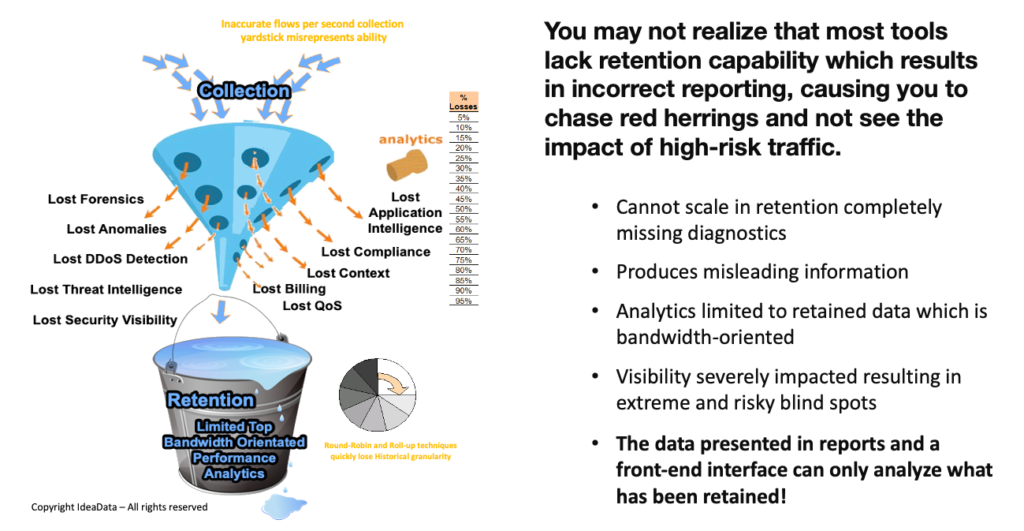

Neither Deep Packet Inspection (DPI) nor legacy NetFlow analyzers can scale in retention. So when comparing those two genres of solution, the only win a low-end NetFlow analyzer has over a DPI solution is that DPI is segment-based, whereas a flow solution is agentless and therefore inherently better.

However, using NetFlow to identify an attack profile or illicit traffic is only possible when flow retention is deep (granular). With that in place, NetFlow strikes a balance between detail and context and gives SOCs and NOCs intelligent insights that reveal the broader factors that can influence your network’s ability to perform.
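To illustrate why retention depth matters, the sketch below compares full retention with a collector that keeps only the largest flows per interval. Treating shallow retention as "top N by bytes" is an assumption made here for illustration, not a description of any particular product, and the flow records are hypothetical.

```python
from collections import defaultdict


def distinct_ports_per_source(records):
    """Count how many distinct destination ports each source touched."""
    ports = defaultdict(set)
    for src, _dst, dport, _nbytes in records:
        ports[src].add(dport)
    return {src: len(seen) for src, seen in ports.items()}


def keep_top_n_by_bytes(records, n):
    """Mimic a collector that retains only the N largest flows per interval."""
    return sorted(records, key=lambda r: r[3], reverse=True)[:n]


# Hypothetical interval: three bulk transfers plus a low-volume port sweep.
# Each record is (source, destination, destination port, bytes).
bulk = [
    ("10.1.0.20", "10.9.0.5", 443, 900_000_000),
    ("10.1.0.31", "10.9.0.5", 443, 750_000_000),
    ("10.2.0.8", "10.9.0.77", 445, 500_000_000),
]
sweep = [("10.3.0.66", "10.9.0.5", port, 60) for port in range(1, 201)]
all_flows = bulk + sweep

full = distinct_ports_per_source(all_flows)
truncated = distinct_ports_per_source(keep_top_n_by_bytes(all_flows, n=3))

print("full retention :", full.get("10.3.0.66", 0), "ports touched by 10.3.0.66")
print("top-3 retention:", truncated.get("10.3.0.66", 0), "ports touched by 10.3.0.66")
```

With every flow retained, the sweep from 10.3.0.66 stands out as 200 distinct ports. Once only the three largest flows are kept, that source disappears from the data entirely, because each of its flows is tiny compared with the bulk transfers.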

Gartner’s assertion that a balance of 80% NetFlow monitoring coupled with 20% Packet Capture is the perfect combination for performance monitoring is no longer accurate, given encryption’s rise. It is correct, however, in attesting to NetFlow’s growing prominence as the monitoring tool of choice. As NetFlow and its various iterations, such as sFlow, IPFIX, ixFlow, flow logs and other flow protocols, continue to expand the breadth of context they provide network engineers, that margin is set to increase in its favor over time.