IT’S WHAT YOU DON’T SEE THAT POSES THE BIGGEST THREATS AND INVISIBLE DANGERS.

The main issue is a lack of visibility into all aspects of physical and cloud network usage, compounded by growing compliance, service level management, and regulatory mandates, increasingly sophisticated cybercrime, and increasing server virtualization.

With appropriate visibility and context, a variety of network issues can be resolved by understanding the causes of network slowdowns and outages, detecting cyber-attacks and risky traffic, determining their origin and nature, and assessing their impact.

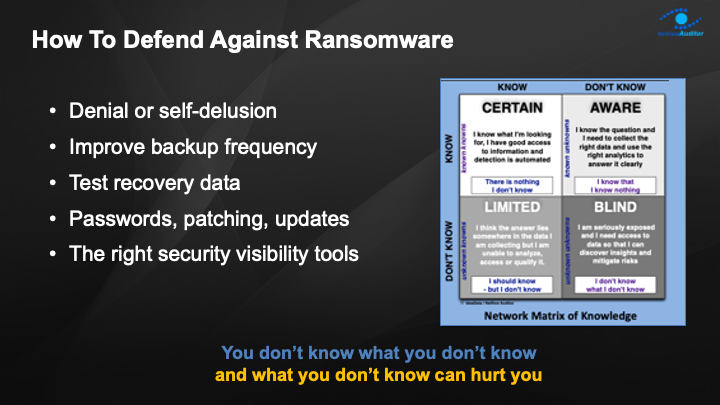

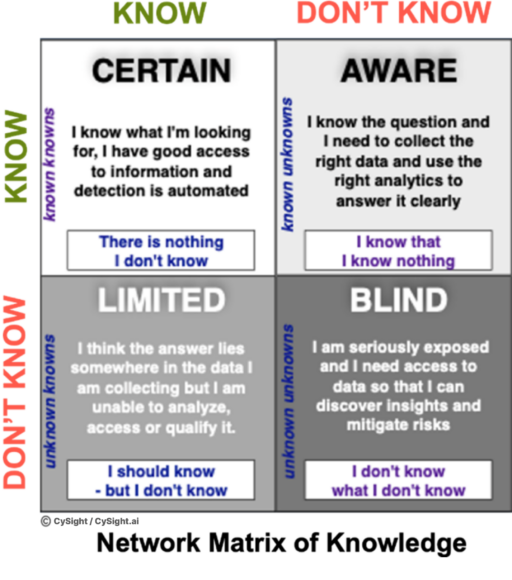

It’s clear that in today’s work-at-home, cyberwar, ransomware world, adequate network visibility is critical to an organization. Defining how much visibility is the “right” amount is becoming more difficult, however, and more often than not even well-seasoned professionals make incorrect assumptions about the visibility they think they have. These misperceptions are much more common than you would expect, and you would be forgiven for thinking you have everything under control.

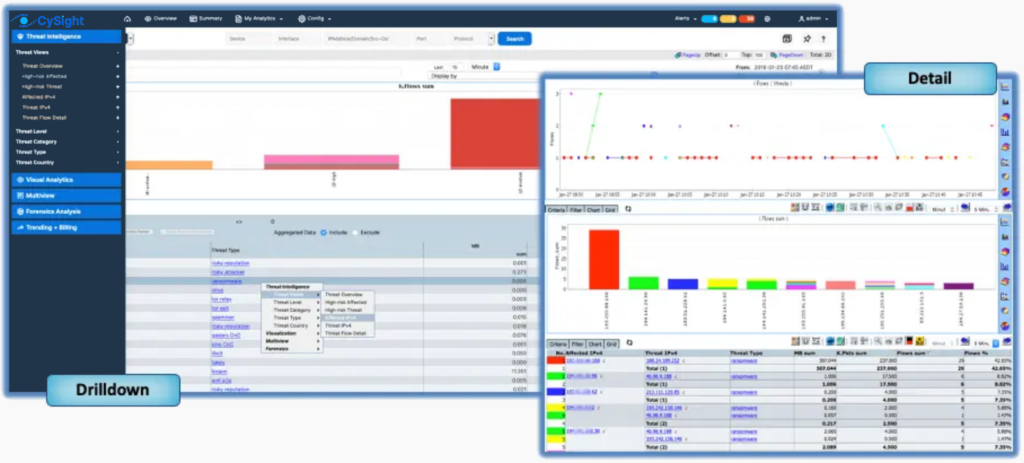

When it comes to resolving IT incidents and security risks and assessing the business impact, every minute counts. The primary goal of Predictive AI Baselining coupled with deep contextual Network Forensics is to improve the visibility of Network Traffic by removing network blindspots and identifying the sources and causes of high-impact traffic.

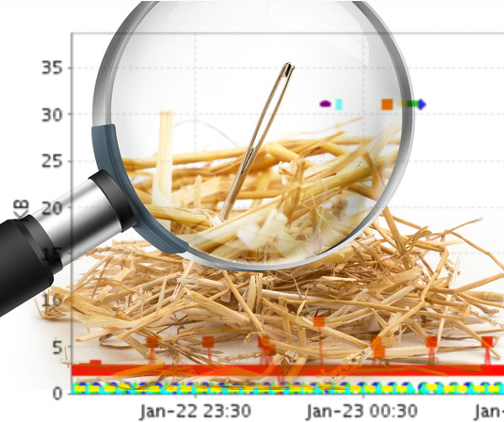

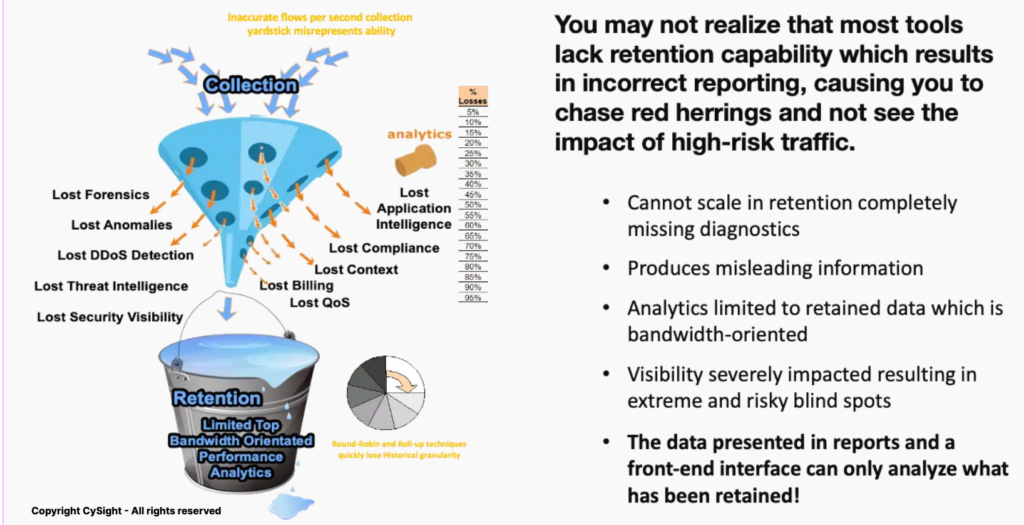

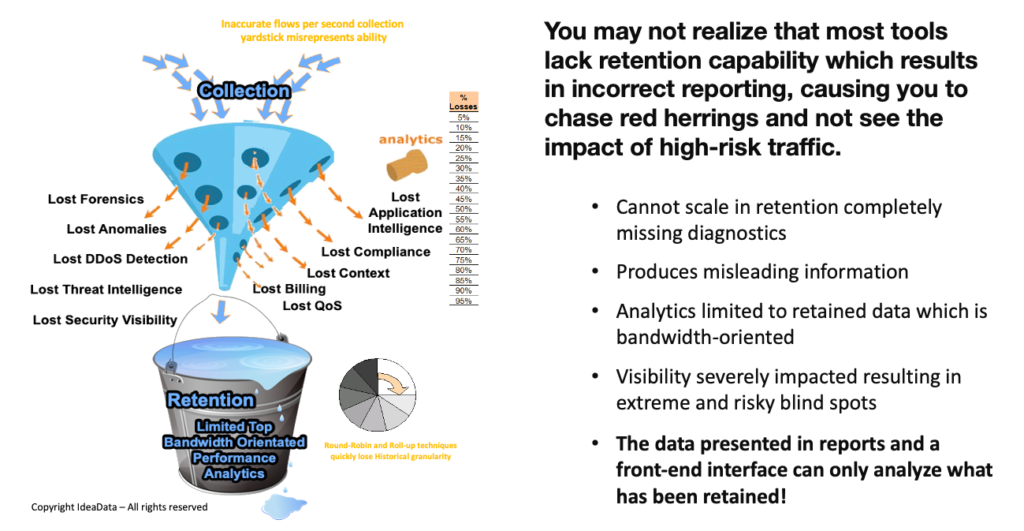

Inadequate solutions (even the most well-known) lull you into a false sense of comfort. Because they tend to retain only the top 2% or 5% of network communications, they frequently cause false positives and red herrings. Cyber threats can come from a variety of sources. These could be new types of crawlers or botnets, or infiltration and ultimately exfiltration that can destroy a business.

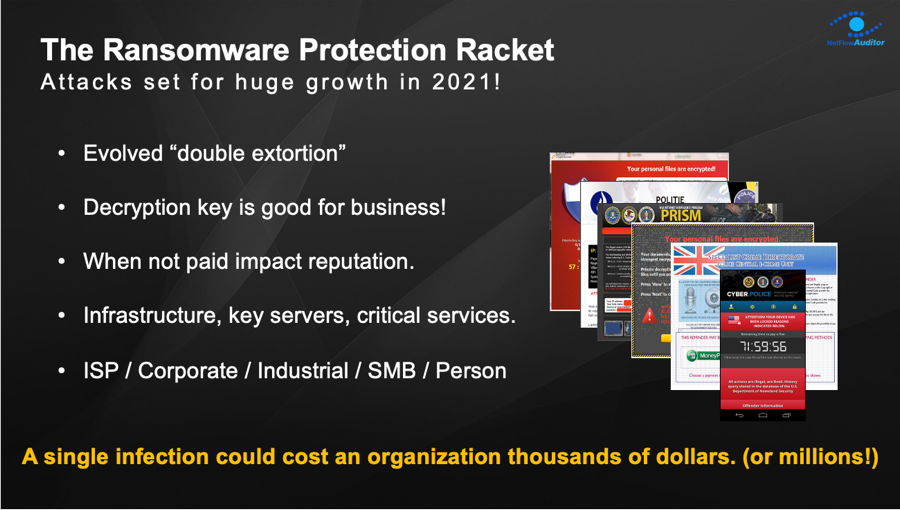

Networks are becoming more complex. Negligence, such as failing to update and patch security holes, creates many inadvertent threats that can open the door to malicious outsiders. Your network could be used to download or host illegal materials, or it could be used entirely or partially to launch an attack. Ransomware attacks are still on the rise, and new ways to infiltrate organizations are being discovered. Denial of Service (DoS) and Distributed Denial of Service (DDoS) attacks continue unabated, posing a significant risk to your organization. Insider threats can also occur as a result of internal hacking or a breach of trust, and your intellectual property may be slowly leaked as a result of negligence, hacking, or compromise by disgruntled employees.

Whether you are buying a phone, a laptop, or a cyber security visibility solution, the same rule applies: marketers are out to get your hard-earned cash by flooding you with specifications and solutions whose abilities are radically overstated. Machine Learning (ML) and Artificial Intelligence (AI) are two of the most recent acronyms to join the list. The only thing you can know for sure, dear cyber and network professional reader, is that they hold a lot of promise.

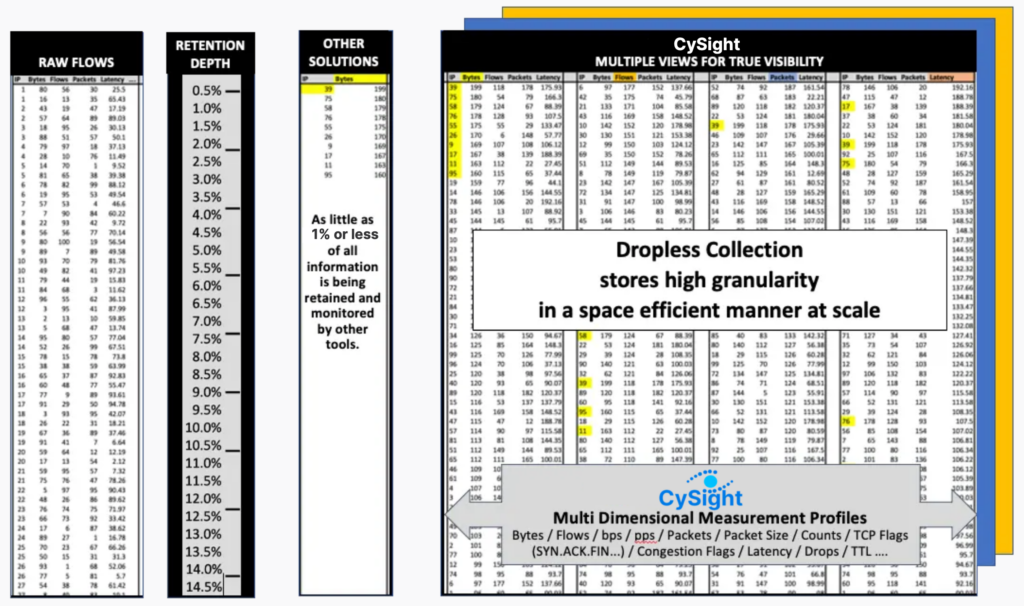

One thing I can tell you from many years of experience in building flow analytics, threat intelligence, and cyber security detection solutions is that without adequate data your results become skewed and misleading. Machine Learning and AI enable high-speed detection and mitigation, but without Granular Analytics (aka Big Data) you won’t know what you don’t know, and neither will your AI!

In our current Covid world, we have all come to appreciate, in some way, the importance of big data, ML, and AI, and how quickly they can help mitigate a global health crisis when properly applied. We only have to look back to a time when drug companies didn’t have access to granular data to see the severe impact that poor data had on people’s lives. Thalidomide is one example. In the same way, when cyber and network visibility solutions only scrape the surface of the data, the information will be incorrect and misleading, and could seriously impact your network and the livelihoods of the people you work for and with.

The Red Pill or The Blue Pill?

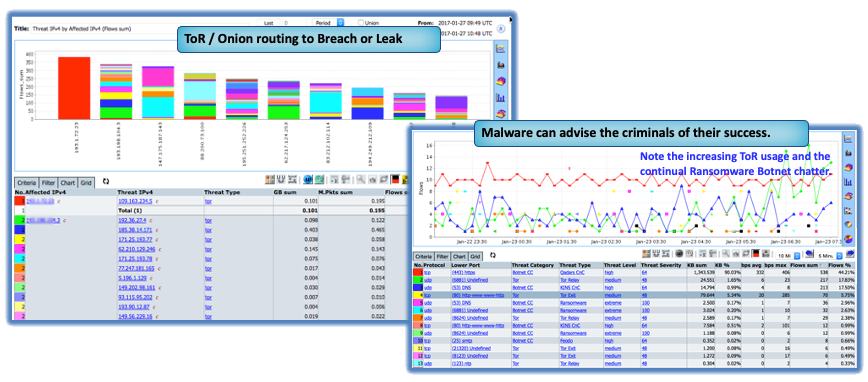

The concept of flow- or packet-based analytics is straightforward, yet these analytics have the potential to be the most powerful tools for detecting ransomware and other network and cloud-related concerns. All communications leave a trail in the flow data, and with the correct tools, you can recover all evidence of an attack, penetration, or exfiltration.

Not all analytic systems are created equal, and the flow/packet ideals are unattainable for many tools because they cannot scale their retention. Even well-known tools have serious flaws and are limited in their ability to retain complete records, which is often overlooked. They don’t effectively provide the visibility into blindspots that they claim.

As already pointed out, over 95% of network and deep packet inspection (DPI) solutions struggle to retain even 2% to 5% of all data captured in medium to large networks. The result is missed diagnoses and significantly misleading analytics that lead to misdiagnosis and risk!
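To make the retention problem concrete, here is a minimal sketch of what happens when a collector keeps only the largest flows. Everything in it is hypothetical: the `Flow` record, the `retain_top_percent` helper, and the synthetic addresses are assumptions made for illustration, not the behavior of any particular product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    src: str
    dst: str
    bytes_sent: int


def retain_top_percent(flows, percent):
    """Keep only the largest flows by byte count, as a top-N collector would."""
    keep = max(1, len(flows) * percent // 100)
    return sorted(flows, key=lambda f: f.bytes_sent, reverse=True)[:keep]


def seen(records, dst):
    """Return True if any retained record talks to the given destination."""
    return any(f.dst == dst for f in records)


# Synthetic data: 99 bulky, benign flows plus one small flow to a suspicious host.
flows = [Flow(f"10.0.0.{i}", "198.51.100.10", 1_000_000 + i) for i in range(1, 100)]
flows.append(Flow("10.0.0.200", "203.0.113.66", 4_096))  # low-and-slow leak

top_5_percent = retain_top_percent(flows, 5)
full_retention = retain_top_percent(flows, 100)

print(len(top_5_percent), seen(top_5_percent, "203.0.113.66"))
print(len(full_retention), seen(full_retention, "203.0.113.66"))
```

In this toy data set the small flow to the suspicious host never makes the top 5%, so any analysis built on the retained subset cannot find it, while the fully retained set still contains it.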

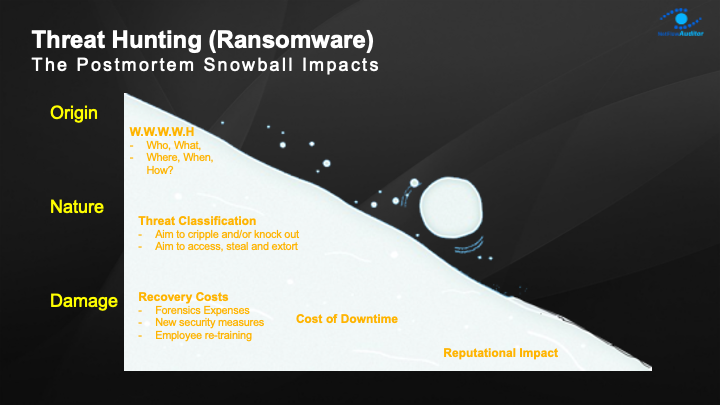

It is critical to have the context and visibility necessary to assess all relevant traffic, to discover concurrent intellectual property exfiltration, and to quantify and mitigate the risk. It’s essential to determine whether a newly found Trojan or ransomware has been active in the past, when it entered, and what systems are still at risk.
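As a rough illustration of that kind of retrospective question, the sketch below searches retained flow records for a newly published indicator and reports when it was first seen and which internal hosts contacted it. The record layout, the `retrospective_search` helper, and the sample addresses and dates are all assumptions made for the example.

```python
from datetime import datetime

# Hypothetical retained flow records: (timestamp, internal host, external peer)
retained_flows = [
    (datetime(2022, 1, 3, 9, 15), "10.0.0.12", "198.51.100.10"),
    (datetime(2022, 1, 9, 2, 40), "10.0.0.31", "203.0.113.66"),
    (datetime(2022, 2, 1, 2, 41), "10.0.0.31", "203.0.113.66"),
    (datetime(2022, 2, 14, 3, 5), "10.0.0.47", "203.0.113.66"),
]


def retrospective_search(flows, indicator):
    """Return when the indicator was first seen and which hosts contacted it."""
    hits = [f for f in flows if f[2] == indicator]
    if not hits:
        return None, set()
    first_seen = min(ts for ts, _, _ in hits)
    hosts = {host for _, host, _ in hits}
    return first_seen, hosts


first_seen, hosts_at_risk = retrospective_search(retained_flows, "203.0.113.66")
print(first_seen, sorted(hosts_at_risk))
```

The answer is only as good as the history behind it: if the early records were never retained, the first-seen date and the list of hosts at risk will both be wrong.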

Threat hunting demands multi-focal analysis at a granular level that sampling and surface flow analytics methods just cannot provide. It is ineffective to be alerted to a potential threat without the context and consequence. A hacker who has gained control of your system is likely to install many backdoors on various interconnected systems to re-enter when you are unaware. As ransomware progresses, it will continue to exploit weaknesses in infrastructure.

Often those most vulnerable are those who believe they have the visibility to detect threats.

Post-mortem analysis of incidents is required, as is the ability to analyze historical behaviors, investigate intrusion scenarios and potential data breaches, qualify internal threats from employee misuse, and quantify external threats from bad actors.

The ability to perform network forensics at a granular level enables an organization to discover issues and high-risk communications happening in real time, or those that occur over a prolonged period, such as data leaks. While standard security devices such as firewalls, intrusion detection systems, packet brokers, or packet recorders may already be in place, they lack the ability to record and report on every network traffic transfer over the long term.

According to industry analysts, enterprise IT security necessitates a shift away from prevention-centric security strategies and toward information and end-user-centric security strategies focused on an infrastructure’s endpoints, as advanced targeted attacks are poised to render prevention-centric security strategies obsolete. Today, cyberwar is a reality that will impact business and government alike.

As every incident response action in today’s connected world includes a communications component, using an integrated cyber and network intelligence approach provides a superior and cost-effective way to significantly reduce the Mean Time To Know (MTTK) for a wide range of network issues or risky traffic, reducing wasted effort and associated direct and indirect costs.

Understanding the Shift Towards Flow-Based Metadata for Network and Cloud Cyber-Intelligence

- The IT infrastructure is continually growing in complexity.

- Deploying packet capture across an organization is prohibitively costly, especially when distributed or deployed per segment.

- “Blocking & tackling” (Prevention) has become the least effective measure.

- Advanced targeted attacks are rendering prevention‑centric security strategies obsolete.

- There is a trend towards information and end-user-centric security strategies focused on an infrastructure’s endpoints.

- Without collective sharing of threat and attacker intelligence, you will not be able to defend your business.

So what now?

If prevention isn’t working, what can IT still do about it?

- In most cases, information must become the focal point of our information security strategies. IT can no longer impose invasive controls on users’ devices or the services they utilize.

Is there a way for organizations to gain a clear picture of what transpired after a security breach?

- Detailed monitoring and recording of interactions with content and systems is required. Predictive AI Baselining, Granular Forensics, Anomaly Detection, and Threat Intelligence capabilities are needed to quickly identify which other users were targeted, which systems were potentially compromised, and what information was exfiltrated.

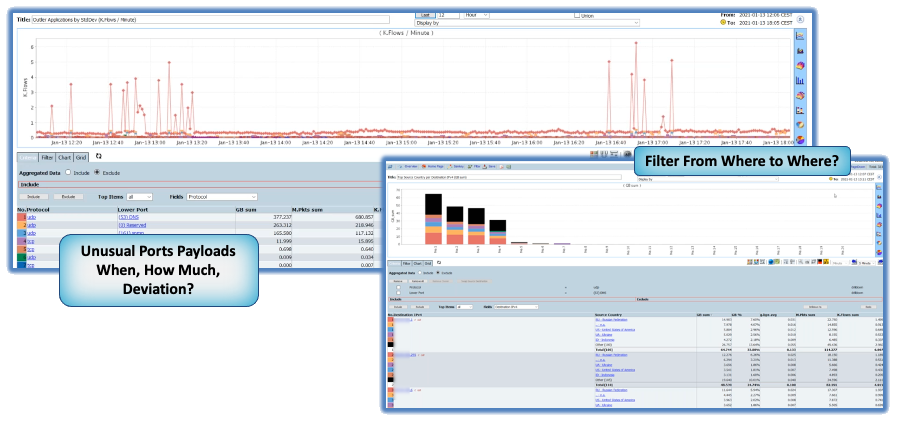

How do you identify attacks without signature-based mechanisms?

- Pervasive monitoring enables you to identify meaningful deviations from normal behavior to infer malicious intent. Nefarious traffic can be identified by correlating real-time threat feeds with current flows. Machine learning can be used to discover outliers and repeat offenders.
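The two ideas in that answer can be sketched in a few lines. The example below uses a plain z-score against a historical baseline as a stand-in for "deviation from normal behavior" and a set lookup as a stand-in for threat-feed correlation. It is a deliberately simple illustration, not the Predictive AI Baselining described in this article, and the function names, the threshold of 3.0, and all sample values are assumptions.

```python
from statistics import mean, stdev


def deviates_from_baseline(history, current, threshold=3.0):
    """Flag `current` if it is more than `threshold` standard deviations from the mean of `history`."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return current != mu
    return abs(current - mu) / sigma > threshold


def match_threat_feed(flows, bad_ips):
    """Return the flows whose destination appears in the threat feed."""
    return [f for f in flows if f["dst"] in bad_ips]


# Hypothetical hourly outbound volume (MB) for one host.
baseline_mb = [120, 131, 118, 125, 122, 129, 127, 124]
normal_hour = deviates_from_baseline(baseline_mb, 126)
unusual_hour = deviates_from_baseline(baseline_mb, 900)

# Hypothetical current flows and threat feed (documentation address ranges).
current_flows = [
    {"src": "10.0.0.12", "dst": "198.51.100.10"},
    {"src": "10.0.0.31", "dst": "203.0.113.66"},
]
threat_feed = {"203.0.113.66", "192.0.2.200"}
matches = match_threat_feed(current_flows, threat_feed)

print(normal_hour, unusual_hour, matches)
```

A real deployment would baseline many dimensions at once (per host, per port, per time of day) and would likely use more robust statistics, but the principle is the same: neither check relies on a signature of the attack itself.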

Summing up

Network security and network monitoring have come a long way and jumped through all kinds of hoops to reach the point where they are today. Unfortunately, through the years, cyber marketing has surpassed cyber solutions, and we now have misconceptions that can do considerable damage to an organization.

The biggest threat is always the one you cannot see, and it hits you the hardest once it has established itself slowly and comfortably in a network, undetected. Complete visibility can only be achieved through 100% collection and retention of all data traversing a network; otherwise, even a single blindspot will affect the entire organization as if it were never protected to begin with. Like finding the single weak link in a chain, cyber criminals will find the perfect access point for penetration.

Inadequate solutions that only retain the top 2% or 5% of network communications frequently cause false positives and red herrings. You need to have 100% access to your communications data for Full Visibility, but how can you be sure that you have it?

You need free access to Full Visibility to unlock all your data, and an intelligent Predictive AI technology that can autonomously and quickly identify what’s not normal at both the macro and micro level of your network, cloud, servers, IoT devices, and other network-connected assets.